According to the headlines, AI is either going to cure cancer or terminate the human species. Meanwhile, on X there’s an unending shouting match between people with animé avatars and those with laser eyes.

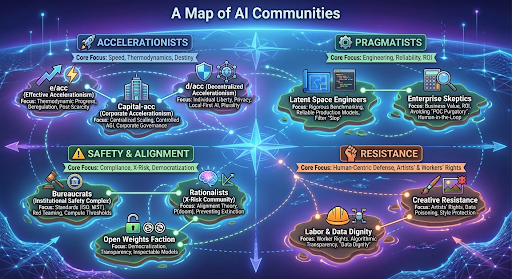

It is easy to tune this out as noise. But these aren’t just internet fights. They are serious philosophical disagreements about what is potentially the most powerful technology humans have ever created. I began my search for AI communities expecting to find two simple camps: “Techno-Optimists” vs. “Doomers”. What I found was at least 10 different views of AI, each with its own goals and buzzwords. Here is an overview.

The Accelerationists: Thermodynamics, Destiny, and Speed

The “Accelerationist” movement believes that stalling technological progress is a moral failing. In this group you will find ideological purists (e/acc), corporate scalers (Capital-Acc), and decentralized defenders (d/acc).

1. Effective Accelerationism (e/acc)

Perspective & Goals: Adherents of e/acc view intelligence as a physical force that reduces entropy. Their goal is to accelerate the growth of intelligence to reach a post-scarcity, multi-planetary future. They oppose regulation, viewing it as “deceleration” that stifles the natural evolutionary process of the universe.

Lexicon:

- “Thermodynamic Destiny”: The belief that the universe “wants” to create intelligence to process energy more efficiently.

- “Decel” (pejorative): Short for “decelerationist.” Someone who advocates for safety, regulation, or pausing AI development. Often equated with stagnation or “doomerism”.

- “Extropian”: A reference to the 1990s philosophy of unbounded human expansion; e/acc views itself as the spiritual successor.

- “Let it rip”: The colloquial motto for releasing models immediately without “paternalistic” safety filters.

Key Hubs:

- Beff’s Newsletter (Guillaume Verdon): The central philosophical text of the movement. https://beff.substack.com

- eu/acc: The European branch focusing on deregulation in the EU. https://euacc.com

- Beff Jezos on X: The primary account for the movement’s founder. https://twitter.com/BasedBeffJezos

2. Corporate Accelerationism (Capital-Acc)

Perspective & Goals: Represented by OpenAI and Google, this group seeks centralized acceleration. They believe AGI (Artificial General Intelligence) is too dangerous for the public to possess directly (“open weights”) and must be controlled by responsible, regulated institutions.

Lexicon:

- “Responsible Scaling”: The euphemism for “moving fast, but only within a walled garden”.

- “Stargate”: Shorthand, borrowed from the name of an OpenAI-linked data center project, for the massive supercomputer clusters (reportedly $100B+) required to train next-generation models, implying only nation-states or mega-corps can compete.

- “Model Collapse”: The fear that training AI on AI-generated data will degrade quality, used to justify the need for proprietary, high-quality human data.

Key Hubs:

- OpenAI Global Affairs: The policy arm pushing for “democratic AI” via centralized infrastructure. https://openai.com/global-affairs

- Anthropic Research: The home of “Constitutional AI,” blending acceleration with corporate safety. https://www.anthropic.com

3. Decentralized Accelerationism (d/acc)

Perspective & Goals: A “defensive” alternative proposed by Vitalik Buterin. They prioritize technologies that protect individual liberty (privacy, encryption, local defense) against centralized power. They want AI running on your laptop, not a corporate server.

Lexicon:

- “Defensive Acceleration”: Prioritizing defense (cybersecurity, biosecurity) over offense.

- “Local-First AI”: Running models on consumer hardware (Edge AI) to ensure privacy and independence from the cloud.

- “Plurality”: A governance concept emphasizing diverse, decentralized decision-making rather than a single “aligned” AI.

Key Hubs:

- Vitalik Buterin’s Blog: The foundational texts for d/acc. https://vitalik.eth.limo

- MIT Decentralized AI Summit: A key academic forum for this movement. https://media.mit.edu/events/mit-decentralized-ai-summit

The Pragmatist Guilds: Engineering & Realism

This community consists of the builders (engineers, CTOs, and data scientists) who have moved past the “magic” phase. They are deeply skeptical of AGI hype and focus on reliability and cost.

1. The “Latent Space” Engineering Community

Perspective & Goals: These are the “AI Engineers.” They reject “vibes-based” evaluation in favor of rigorous benchmarks. They are focused on making stochastic models behave reliably in production.
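To make the contrast between “vibes-based” evaluation and benchmarks concrete, here is a minimal sketch of a repeated-trial check. Everything in it is hypothetical: the test cases, the `make_flaky_model` stand-in (a seeded random function, not a real model or API), and the 95% pass-rate bar are assumptions chosen for illustration, not a method taken from this community.

```python
import random
from typing import Callable

# Hypothetical test cases: (prompt, expected answer). Not from any real benchmark.
CASES = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]


def make_flaky_model(error_rate: float, seed: int) -> Callable[[str], str]:
    """Build a stand-in for a stochastic model that sometimes answers wrongly."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    answers = dict(CASES)

    def model(prompt: str) -> str:
        # With probability `error_rate`, return an unhelpful answer.
        if rng.random() < error_rate:
            return "I'm not sure"
        return answers[prompt]

    return model


def pass_rates(model: Callable[[str], str], cases, trials: int = 20) -> dict[str, float]:
    """Run every case `trials` times and report the fraction of exact matches."""
    rates = {}
    for prompt, expected in cases:
        passes = sum(model(prompt) == expected for _ in range(trials))
        rates[prompt] = passes / trials
    return rates


def release_gate(rates: dict[str, float], min_rate: float = 0.95) -> bool:
    """Pass only if every case meets the (assumed) minimum pass rate."""
    return all(rate >= min_rate for rate in rates.values())


if __name__ == "__main__":
    rates = pass_rates(make_flaky_model(error_rate=0.1, seed=0), CASES)
    print(rates, "ship" if release_gate(rates) else "hold")
```

The point of the sketch is the shape of the check rather than the numbers: a fixed set of cases, many runs per case because the output is stochastic, and a threshold decided before looking at the results.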

Lexicon:

- “Vibe Coding”: A pejorative term for coding by guessing prompts until it “feels” right; they prefer deterministic engineering.

- “Slop”: Low-quality, AI-generated content (text or images) that floods the internet; engineers aim to filter this out.

- “Wrapper”: A dismissive term for a startup that is merely a thin user interface over OpenAI’s API, with no defensible moat.

- “Jevons Paradox of Code”: The argument that making coding cheaper (via AI) increases, rather than decreases, the demand for software engineers.

Key Hubs:

- Latent Space (Podcast & Discord): The “town square” for the AI Engineer. https://www.latent.space

- Simon Willison’s Weblog: The definitive blog for skeptical, practical AI engineering. https://simonwillison.net

- Interconnects (Nathan Lambert): Technical analysis of open models and training dynamics. https://www.interconnects.ai

2. The Enterprise Skeptics

Perspective & Goals: CTOs and CIOs focused on ROI. They are wary of “Science Projects” that look cool but deliver no business value.

Lexicon:

- “POC Purgatory”: The state where an AI project works in a demo (Proof of Concept) but fails to scale to production due to cost or hallucinations.

- “Jagged Frontier”: The concept that AI is superhuman at some tasks and incompetent at others, often unpredictably (a term from a 2023 study co-authored by Ethan Mollick, who popularized it).

- “Human-in-the-Loop”: The requirement that a human verifies AI output before it is used.

Key Hubs:

- One Useful Thing (Ethan Mollick): A resource for evidence-based AI management. https://www.oneusefulthing.org

The Safety and Alignment Archipelago

The “Safety” community has professionalized. It is no longer just philosophers on forums; it now includes bureaucrats in government agencies.

1. The Institutional Safety Complex (The Bureaucrats)

Perspective & Goals: Government agencies (US AISI, EU AI Act enforcers) that treat safety as a compliance matter. Their goal is to establish standards (ISO, NIST) for model release.

Lexicon:

- “Red Teaming”: The process of hiring hackers/experts to attack a model to find flaws before release.

- “System Cards”: The “nutrition label” for an AI model, detailing its capabilities and safety tests.

- “Compute Threshold”: The amount of training compute that triggers government oversight (e.g., 10^26 operations in the 2023 US executive order on AI, or 10^25 FLOPs in the EU AI Act).
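
For a rough sense of scale, the sketch below estimates a model's training compute and compares it with two published thresholds: 10^26 operations (the 2023 US executive order on AI) and 10^25 FLOPs (the EU AI Act). The "6 × parameters × tokens" rule of thumb, the function names, and the example model size are assumptions introduced here for illustration. None of them come from the regulations themselves, and real compliance accounting is more involved.

```python
# Rule-of-thumb estimate (an assumption): training compute ~ 6 * parameters * tokens.

US_EO_THRESHOLD = 1e26      # operations, 2023 US executive order on AI
EU_AI_ACT_THRESHOLD = 1e25  # floating-point operations, EU AI Act


def estimate_training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute in floating-point operations."""
    return 6.0 * n_params * n_tokens


def crossed_thresholds(flops: float) -> list[str]:
    """Return the names of the thresholds that the given compute meets or exceeds."""
    thresholds = {
        "EU AI Act (1e25)": EU_AI_ACT_THRESHOLD,
        "US executive order (1e26)": US_EO_THRESHOLD,
    }
    return [name for name, limit in thresholds.items() if flops >= limit]


if __name__ == "__main__":
    # Hypothetical model: 70 billion parameters trained on 15 trillion tokens.
    flops = estimate_training_flops(70e9, 15e12)
    print(f"{flops:.2e}", crossed_thresholds(flops))
```

With these hypothetical numbers the estimate comes to roughly 6.3e24 operations, which sits below both thresholds. A model with ten times the parameters on the same data would cross the EU figure but not the US one.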

Key Hubs:

- US AI Safety Institute (NIST): The operational hub for US AI regulation. https://www.nist.gov/isi

2. The X‑Risk / Rationalist Community (The Remnant)

Perspective & Goals: The original “Doomers” who fear superintelligence will cause extinction. They focus on Alignment Theory: ensuring an AI’s internal goals are compatible with human survival.

Lexicon:

- “P(doom)”: The probability an individual assigns to AI causing human extinction. “What’s your P(doom)?” is a standard greeting.

- “Shoggoth”: A meme depicting LLMs as Lovecraftian monsters wearing a smiley face mask (the mask is the “RLHF” safety training).

- “Orthogonality Thesis”: The thesis that intelligence and goals are independent: an AI can be super-intelligent yet pursue trivial or dangerous goals (like making paperclips).

Key Hubs:

- LessWrong: The original forum for Rationalist and X‑Risk discussion. https://www.lesswrong.com

- Alignment Forum: High-level technical research on AI safety. https://www.alignmentforum.org

3. The “Open Weights” Faction (The Democratizers)

Perspective & Goals: Researchers who believe “Safety through Obscurity” is dangerous. They fight for the right to download and inspect model weights.

Lexicon:

- “GPU Poor”: A badge of honor for researchers working with limited compute, optimizing models to run on consumer hardware.

- “Open Weights” vs “Open Source”: A crucial distinction. “Open Weights” means you can download the model; “Open Source” means you have the training data and code too. They push for the latter.

Key Hubs:

- EleutherAI: A grassroots collective for open-source AI research. https://www.eleuther.ai

- Hugging Face: The “GitHub” of the open AI community. https://huggingface.co

The Resistance Front: Human-Centric Defense

A coalition of artists and labor unions fighting the economic and cultural encroachment of AI.

1. The Creative Resistance

Perspective & Goals: Artists who view Generative AI as theft. They develop technical tools to “poison” training data to protect their style.

Lexicon:

- “Style Mimicry”: The specific harm of an AI copying a living artist’s unique visual style.

- “Data Poisoning”: Subtly altering images so that AI models trained on them learn the wrong associations (e.g., Nightshade).

- “Scraping”: The act of taking data from the web without consent; viewed here as “looting”.

Key Hubs:

- Glaze / Nightshade Project: The University of Chicago team building defense tools. https://glaze.cs.uchicago.edu

- EGAIR (European Guild for AI Regulation): A lobbying group for artists’ rights. https://www.egair.eu

2. Labor & Data Dignity

Perspective & Goals: Unions fighting “Algorithmic Management.” They demand transparency on how AI is used to hire, fire, and monitor workers.

Lexicon:

- “Bossware”: Surveillance software used to track remote workers’ productivity.

- “Data Dignity”: The concept that workers own the data they generate and should be paid for it.

Key Hubs:

- UC Berkeley Labor Center: Research on AI’s impact on workers. https://laborcenter.berkeley.edu

Conclusion: Navigating the Maze

Clearly the AI debate isn’t really about AI. It’s about power, trust, speed, control, and our vision for the future. The danger is that with so many strong views, AI conversations can easily collapse into shouting matches. So as we talk to others about AI, we need to be ready to listen and be cognizant of their concerns.