by Robert Bruce, TCG Deputy CTO

In 2025, we’re past novelty. We’re entering a phase where using AI well is a professional skill that any developer must have to meet the expectations of clients and employers. Whether it’s GitHub Copilot or a few well-aimed prompts into ChatGPT, AI is already writing code in today’s government projects. But writing code faster is not the same as delivering value, so how do agencies fit AI into a project’s architecture, compliance model, and team structure?

In this two-part post, we answer that question.

Part 1 describes AI best practices that improve outputs, including test-driven development, code reviews, code verification, prompt engineering, and documentation.

Part 2, coming next week, describes how to use AI in balancing small teams, ensuring quality is not sacrificed for speed, practicing the principle of trust but verify, and more.

Part 1: Best Practices

The following best practices are tactics that help smaller teams deliver high-quality, secure, and compliant systems for our customers:

Test-Driven Development

Test-driven development has always required teams to define requirements clearly, and that discipline becomes even more valuable when working with AI. Imagine you’re building an API to handle leave requests. You write a comprehensive set of unit tests up front: business hours only, no overlapping dates, supervisor approval logic. Then you use AI to scaffold the code that makes those tests pass. The output is fast, sure, but more importantly, it’s anchored. The tests act as a contract, and the AI simply becomes a way to deliver against it. The AI isn’t doing the thinking; it’s implementing the spec.

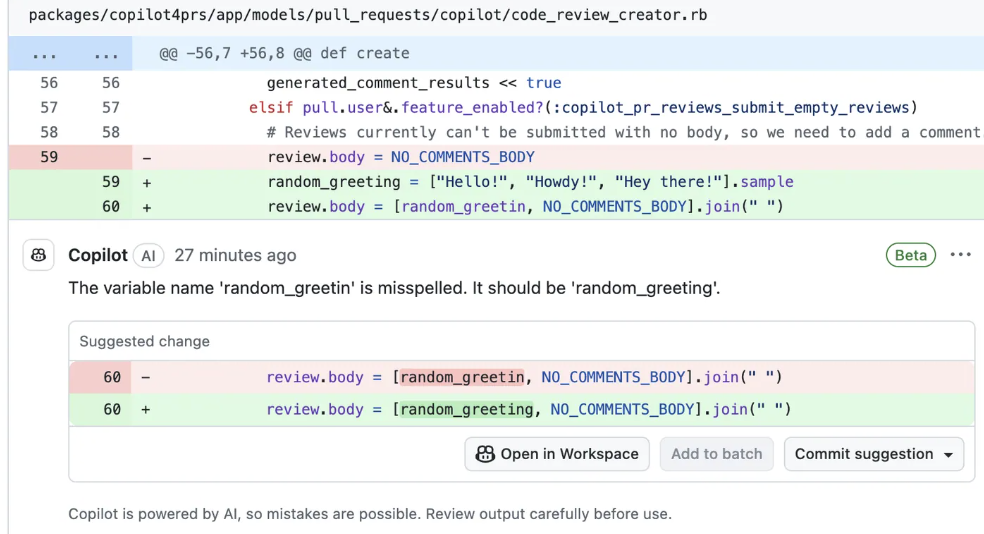
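
As a rough illustration of the leave-request example above, the sketch below puts the tests first and a candidate implementation second. Everything named here (`LeaveRequest`, `validate_leave`, `needs_supervisor_approval`, the three-day approval threshold, and reading "business hours" as "weekdays only") is an assumption made for the example, not a description of any real system.

```python
import unittest
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class LeaveRequest:
    employee: str
    start: date
    end: date  # inclusive


class LeaveValidationError(ValueError):
    """Raised when a request breaks one of the business rules."""


class LeaveRulesTest(unittest.TestCase):
    """The contract: written by the team before any implementation exists."""

    def test_weekend_start_is_rejected(self):
        # 2025-03-08 is a Saturday.
        req = LeaveRequest("avery", date(2025, 3, 8), date(2025, 3, 10))
        with self.assertRaises(LeaveValidationError):
            validate_leave(req, existing=[])

    def test_overlapping_dates_are_rejected(self):
        booked = LeaveRequest("avery", date(2025, 3, 3), date(2025, 3, 5))
        req = LeaveRequest("avery", date(2025, 3, 5), date(2025, 3, 7))
        with self.assertRaises(LeaveValidationError):
            validate_leave(req, existing=[booked])

    def test_other_employees_do_not_conflict(self):
        booked = LeaveRequest("blake", date(2025, 3, 3), date(2025, 3, 5))
        req = LeaveRequest("avery", date(2025, 3, 5), date(2025, 3, 7))
        validate_leave(req, existing=[booked])  # must not raise

    def test_long_leave_needs_supervisor_approval(self):
        long_req = LeaveRequest("avery", date(2025, 3, 3), date(2025, 3, 7))
        short_req = LeaveRequest("avery", date(2025, 3, 3), date(2025, 3, 4))
        self.assertTrue(needs_supervisor_approval(long_req))
        self.assertFalse(needs_supervisor_approval(short_req))


# The kind of implementation an AI assistant might be asked to draft so that
# the tests above pass. The rules are illustrative assumptions.
def validate_leave(request, existing):
    if request.end < request.start:
        raise LeaveValidationError("end date precedes start date")
    # date.weekday() returns 5 for Saturday and 6 for Sunday.
    if request.start.weekday() >= 5 or request.end.weekday() >= 5:
        raise LeaveValidationError("leave must start and end on a weekday")
    for other in existing:
        same_person = other.employee == request.employee
        overlaps = request.start <= other.end and other.start <= request.end
        if same_person and overlaps:
            raise LeaveValidationError("overlaps an existing request")


def needs_supervisor_approval(request, threshold_days=3):
    # Assumed rule: anything longer than threshold_days calendar days.
    length = (request.end - request.start).days + 1
    return length > threshold_days
```

Saved as a module, the tests can be run with `python -m unittest`. If the AI-drafted functions drift from the rules, the suite fails and the draft goes back for another pass.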

Code reviews

The benefits of AI become even more apparent when reviewing and refining code. In a typical workflow, a developer opens a pull request, and before a teammate even gets to it, tools like SonarQube (with GitHub PR integration) or GitHub Copilot (with PR review capabilities) have already flagged bugs, vulnerabilities, code smells, or gaps in test coverage. The developer sees actionable feedback in minutes, not days, and in some cases it can come with proposed changes that can simply be accepted. That feedback isn’t perfect, but it catches the obvious issues early and removes a layer of back-and-forth.

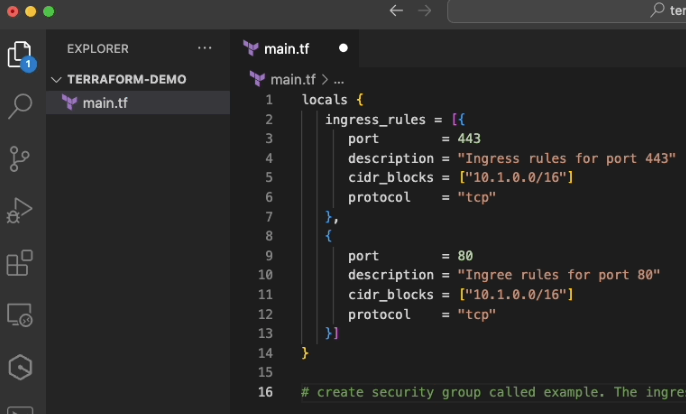

Code verification

This is where DevSecOps and compliance-aligned practices step in. For example, a team deploying infrastructure-as-code to AWS GovCloud might use Amazon CodeWhisperer (now part of Amazon Q Developer) to generate Terraform configurations. Those configurations don’t go live immediately. Instead, they run through policy checks, static scanning, and containerized testing before ever touching the production environment. If the AI output fails to encrypt a resource or misconfigures IAM roles, the pipeline halts. Again, in this setup AI isn’t a shortcut; it’s a first draft, automatically subjected to the same standards you’d apply to a human’s work.
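
To make the "pipeline halts" idea concrete, here is a minimal sketch of a policy gate, assuming the plan has already been exported with `terraform show -json` (which yields a document with a `resource_changes` list). The rule table, the function names, and the sample plan are all hypothetical, and a real pipeline would more likely rely on a dedicated policy-as-code tool than on a hand-rolled script like this one.

```python
import json

# Hypothetical rule table: resource type -> attribute that must be true.
ENCRYPTION_FLAGS = {
    "aws_ebs_volume": "encrypted",
    "aws_db_instance": "storage_encrypted",
}


def _as_list(value):
    """IAM documents allow a single item or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def find_violations(plan):
    """Return readable policy violations found in a Terraform plan dict."""
    violations = []
    for change in plan.get("resource_changes", []):
        after = (change.get("change") or {}).get("after")
        if not after:
            continue  # deletions carry no planned state to inspect
        rtype, address = change.get("type"), change.get("address")

        flag = ENCRYPTION_FLAGS.get(rtype)
        if flag and after.get(flag) is not True:
            violations.append(f"{address}: {flag} must be true")

        if rtype == "aws_iam_policy" and after.get("policy"):
            document = json.loads(after["policy"])
            for stmt in _as_list(document.get("Statement", [])):
                # Only the bare "*" wildcard is caught here; patterns such
                # as "s3:*" would need an additional rule.
                if (stmt.get("Effect") == "Allow"
                        and "*" in _as_list(stmt.get("Action", []))
                        and "*" in _as_list(stmt.get("Resource", []))):
                    violations.append(f"{address}: Allow * on * is forbidden")
    return violations


def gate(plan):
    """Return a CI exit code: non-zero tells the pipeline to stop."""
    problems = find_violations(plan)
    for problem in problems:
        print("POLICY VIOLATION:", problem)
    return 1 if problems else 0


# Hypothetical plan fragment standing in for AI-generated Terraform output.
SAMPLE_PLAN = {
    "resource_changes": [
        {"address": "aws_ebs_volume.data", "type": "aws_ebs_volume",
         "change": {"after": {"encrypted": False, "size": 100}}},
        {"address": "aws_db_instance.app", "type": "aws_db_instance",
         "change": {"after": {"storage_encrypted": True}}},
        {"address": "aws_iam_policy.admin", "type": "aws_iam_policy",
         "change": {"after": {"policy": json.dumps({
             "Version": "2012-10-17",
             "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}],
         })}}},
    ]
}
```

In a CI step, the return value of `gate(...)` would be passed to `sys.exit`, so an unencrypted volume or an overly broad IAM policy stops the deployment regardless of whether a person or an AI wrote the configuration.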

Prompt Engineering

Prompt engineering has also emerged as a foundational skill. This isn’t throwing darts randomly, hoping to hit a useful number. Prompt engineering centers on knowing how to speak precisely to extract usable results. Consider a scenario where a developer needs to build a secure file upload endpoint. A prompt like “create a secure upload API” will likely yield something vague or overly permissive. But prompting with “create a Flask endpoint that limits file size, scans file headers for MIME type, writes to an encrypted S3 bucket, and logs uploads to CloudWatch” gets you something far closer to production quality. Effective prompts can function like shared infrastructure: teams that document and standardize them across projects develop an internal language for working with AI.
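
One way a team might treat prompts as shared infrastructure is to keep them as small, reviewed templates in the repository. The sketch below is only an illustration: `PromptTemplate`, its fields, and the numbered-requirements layout are assumptions, while the requirement text comes from the upload example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    """A reusable prompt that lives in version control (hypothetical design)."""
    name: str
    task: str
    requirements: tuple = ()

    def render(self):
        # Spell out every requirement so nothing is left to the model's guess.
        lines = [self.task.rstrip(".") + " that meets all of these requirements:"]
        lines += [f"{i}. {req}" for i, req in enumerate(self.requirements, start=1)]
        return "\n".join(lines)


SECURE_UPLOAD = PromptTemplate(
    name="secure-file-upload",
    task="Create a Flask endpoint for file uploads",
    requirements=(
        "Limit file size",
        "Scan file headers for MIME type",
        "Write to an encrypted S3 bucket",
        "Log uploads to CloudWatch",
    ),
)

print(SECURE_UPLOAD.render())
```

Because the template is ordinary code, a change to the team's baseline (say, a new logging requirement) goes through the same pull request and review as any other change.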

Documentation

That communication doesn’t end at the prompt. Documentation is just as important. In regulated environments, AI-generated code must be auditable. Who generated it? What prompt was used? What changes were made after review? What tests validate it? Treating AI interactions like any other system artifact makes it easier to secure Authority to Operate (ATO), answer auditors, and maintain trust across stakeholders. Prompt history and AI output logs can be treated as part of system documentation, stored in version control alongside design diagrams and requirements.
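
The audit questions above map naturally onto a small record that can be committed next to the code it describes. The following is a sketch under assumptions: the `AIChangeRecord` fields, the sample values, and the choice of JSON are illustrative, not a mandated ATO format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AIChangeRecord:
    generated_by: str        # who ran the tool
    tool: str                # which assistant produced the draft
    prompt: str              # what prompt was used
    output_sha256: str       # fingerprint of the raw AI output
    review_changes: str      # what changed after human review
    validating_tests: tuple  # which tests validate the result


def record_for(generated_by, tool, prompt, output, review_changes, validating_tests):
    """Build a record, storing a hash of the output rather than the output."""
    digest = hashlib.sha256(output.encode("utf-8")).hexdigest()
    return AIChangeRecord(generated_by, tool, prompt, digest,
                          review_changes, tuple(validating_tests))


def to_json(record):
    """Serialize with stable key order so diffs in version control stay small."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


# Hypothetical values, shown only to illustrate the shape of a record.
example = record_for(
    generated_by="j.doe",
    tool="example-assistant",
    prompt="Create a Flask endpoint for file uploads ...",
    output="def upload(): ...",
    review_changes="Tightened the size limit; added MIME allow-list.",
    validating_tests=["tests/test_upload.py"],
)
print(to_json(example))
```

Hashing the output keeps the record compact while still letting an auditor confirm that a stored AI log matches what was actually reviewed.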

Coming Soon: Part 2