By Robert Buccigrossi, TCG CTO

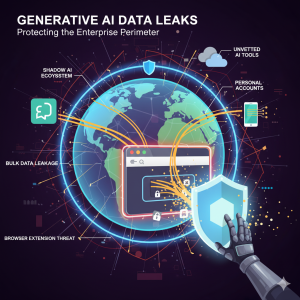

The rush to integrate Generative AI has created a vast, uncontrolled ecosystem within most organizations. At a recent CSA webinar, “Preventing GenAI Data Leakage: Enabling Innovation Without Compromising Security”, security experts from Layer X provided a pragmatic look at the tangible data leakage risks this new reality presents. They discussed the insecure behaviors and overlooked channels already exposing corporate data. Here are the essential facts and lessons for any technical team navigating this landscape.

Lesson 1: Enterprise AI is a Shadow Ecosystem

The Reality:

Despite official policies, enterprise AI usage is overwhelmingly uncontrolled and invisible. The actual usage patterns reveal a “shadow AI” environment where the vast majority of activity occurs outside of sanctioned, monitored channels. This isn’t a minor compliance issue; it’s the default operational state.

The Risk:

When employees use personal accounts for work, the organization loses visibility into, and control over, what is shared. Data entered into free, consumer-grade tools may be used for model training, may be retained indefinitely outside the organization’s perimeter, and is not covered by corporate security agreements. The result is a large blind spot in which sensitive data regularly leaves the organization with no audit trail.

The Proof:

- Layer X research shows that 89% of enterprise AI usage is invisible to the organization.

- Over 71% of all connections to GenAI tools are made using personal, non-corporate accounts. When corporate accounts are used, nearly 60% are still not federated through SSO, leaving them unmonitored.

- The market itself is highly concentrated: ChatGPT alone accounts for over 50% of enterprise usage, and the top five tools represent 86% of all activity. The remaining 14%, however, is spread across a long tail of dozens of specialized, unvetted tools, each one a potential leakage point.
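
The three account categories behind the second statistic (personal accounts, corporate accounts that bypass SSO, and SSO-federated corporate accounts) are simple to compute once sign-in events are visible. The TypeScript sketch below is a hypothetical illustration, not Layer X’s method: it assumes the organization already collects GenAI sign-in events, each carrying the account email and a flag indicating whether the session was issued by the corporate identity provider. The `GenAiLoginEvent` shape, the `classifyAccount` and `summarize` functions, and the `example.com` domain are all invented for the example.

```typescript
// Hypothetical sign-in record for a GenAI tool, e.g. captured by a
// browser-level agent. Field names are assumptions for this sketch.
interface GenAiLoginEvent {
  tool: string; // site the user signed in to, e.g. "chatgpt.com"
  accountEmail: string; // identity used for the sign-in
  viaCorporateSso: boolean; // true if issued by the corporate identity provider
}

type AccountClass = "personal" | "corporate-unfederated" | "corporate-sso";

// Classify a sign-in by its email domain and whether SSO was used.
function classifyAccount(
  event: GenAiLoginEvent,
  corporateDomains: ReadonlySet<string>,
): AccountClass {
  const at = event.accountEmail.lastIndexOf("@");
  const domain = at >= 0 ? event.accountEmail.slice(at + 1).toLowerCase() : "";
  if (!corporateDomains.has(domain)) return "personal"; // external or malformed
  return event.viaCorporateSso ? "corporate-sso" : "corporate-unfederated";
}

// Count sign-ins per class: the raw material for usage statistics.
function summarize(
  events: readonly GenAiLoginEvent[],
  corporateDomains: ReadonlySet<string>,
): Record<AccountClass, number> {
  const counts: Record<AccountClass, number> = {
    personal: 0,
    "corporate-unfederated": 0,
    "corporate-sso": 0,
  };
  for (const e of events) {
    counts[classifyAccount(e, corporateDomains)] += 1;
  }
  return counts;
}

// Usage with made-up events.
const corp = new Set(["example.com"]);
const events: GenAiLoginEvent[] = [
  { tool: "chatgpt.com", accountEmail: "alice@gmail.com", viaCorporateSso: false },
  { tool: "chatgpt.com", accountEmail: "bob@example.com", viaCorporateSso: false },
  { tool: "gemini.google.com", accountEmail: "carol@Example.com", viaCorporateSso: true },
];
console.log(summarize(events, corp)); // one sign-in in each class
```

Keying on the email domain is deliberately conservative: any account outside the listed corporate domains counts as personal, which errs toward surfacing unmanaged usage rather than hiding it.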

Lesson 2: Data Leakage Happens in Bulk, Not Just in Prompts

The Reality:

While security policies often focus on the content of individual text prompts, the most significant data loss events occur through bulk data-handling activities that are common in technical workflows.

The Risk:

Developers are prime users of GenAI for tasks like code generation, debugging, and analysis. The common practice of copy-pasting large blocks of source code into a public LLM directly exposes proprietary algorithms and intellectual property. Similarly, business users in finance or HR upload entire documents (spreadsheets, reports) containing sensitive PII, financial data, and customer information to leverage the model’s analytical power, creating major compliance and security risks.

The Proof:

- In one of the best-known examples of this risk, engineers at Samsung pasted confidential source code and internal meeting notes directly into ChatGPT. That data ended up with a third party outside Samsung’s control, prompting the company to temporarily ban the tool and build its own internal AI.

Lesson 3: The Browser Extension is a High-Privilege Threat Vector

The Reality:

AI-powered browser extensions offer productivity shortcuts but are among the most underestimated and pervasive security risks. They run with extensive permissions, often silently in the background, with continuous access to nearly everything a user sees and does in the browser.

The Risk:

These extensions are not passive tools; they can actively read, modify, and exfiltrate data from web pages. A compromised or malicious AI extension can access credentials, session tokens, and sensitive corporate data from other SaaS applications. Because most endpoint security tools have no visibility into activity inside the browser, this threat surface is largely unmonitored.

The Proof:

- According to Layer X data, 1 in 5 enterprise users has an AI-powered browser extension installed.

- Of those extensions, nearly 60% operate with “high” or “critical” permissions, giving them extensive access to browser data and credentials. Another report on enterprise browser extension security found a nearly identical figure, noting 58% of extensions request high-risk permissions.
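
Permission grants are visible in an extension’s own declaration. Chrome extensions list requested capabilities in `manifest.json` under `permissions` and, in Manifest V3, `host_permissions`; content scripts add further URL patterns in `content_scripts[].matches`. The TypeScript sketch below rates a manifest by its riskiest request. The permission names are real Chrome extension permissions, but the risk tiers assigned to them, and the `rateManifest` function itself, are a hypothetical mapping chosen for illustration, not Layer X’s scoring model or any published standard.

```typescript
// The subset of a Chrome extension manifest that governs access.
interface ExtensionManifest {
  name: string;
  manifest_version: number;
  permissions?: string[];
  host_permissions?: string[]; // Manifest V3 location for URL patterns
  content_scripts?: { matches: string[] }[];
}

type Risk = "low" | "medium" | "high" | "critical";
const RISK_ORDER: readonly Risk[] = ["low", "medium", "high", "critical"];

// Hypothetical tiers for illustration only; not an official classification.
const PERMISSION_RISK = new Map<string, Risk>([
  ["debugger", "critical"], // attaches to tabs via the Chrome DevTools Protocol
  ["nativeMessaging", "critical"], // exchanges messages with native programs
  ["cookies", "high"], // reads and writes cookies, including session cookies
  ["webRequest", "high"], // observes network requests
  ["scripting", "high"], // injects scripts into pages
  ["clipboardRead", "high"], // reads the clipboard
  ["history", "high"], // reads browsing history
  ["tabs", "medium"], // sees URLs and titles of open tabs
  ["activeTab", "low"],
  ["storage", "low"],
]);

// URL patterns that match every site.
const BROAD_HOSTS = new Set(["<all_urls>", "*://*/*", "http://*/*", "https://*/*"]);

function maxRisk(a: Risk, b: Risk): Risk {
  return RISK_ORDER.indexOf(a) >= RISK_ORDER.indexOf(b) ? a : b;
}

// Rate a manifest by its riskiest request and record why.
function rateManifest(m: ExtensionManifest): { risk: Risk; reasons: string[] } {
  let risk: Risk = "low";
  const reasons: string[] = [];
  for (const p of m.permissions ?? []) {
    const r = PERMISSION_RISK.get(p);
    if (r !== undefined && r !== "low") {
      risk = maxRisk(risk, r);
      reasons.push(`${p}: ${r}`);
    }
  }
  // Manifest V2 mixed URL patterns into `permissions`, so check it too.
  const patterns: string[] = [...(m.host_permissions ?? []), ...(m.permissions ?? [])];
  for (const cs of m.content_scripts ?? []) patterns.push(...cs.matches);
  if (patterns.some((h) => BROAD_HOSTS.has(h))) {
    // All-sites access extends any high-risk capability to every SaaS app.
    const broad: Risk = risk === "high" || risk === "critical" ? "critical" : "high";
    risk = maxRisk(risk, broad);
    reasons.push(`all-sites access: ${broad}`);
  }
  return { risk, reasons };
}

const sample: ExtensionManifest = {
  name: "Hypothetical AI Summarizer",
  manifest_version: 3,
  permissions: ["storage", "scripting", "tabs"],
  host_permissions: ["<all_urls>"],
};
console.log(rateManifest(sample)); // rated critical: script injection on all sites
```

An inventory tool could run a check like this over every installed extension’s manifest. The design point is that broad host access combined with script injection or cookie access is what turns a convenient AI helper into a high-privilege reader of every SaaS session.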

Lesson 4: The “Block Everything” Strategy is Untenable

The Reality:

Faced with unknown risks, many organizations have defaulted to banning GenAI tools outright. Although it may seem safe, this approach is at best a stopgap: it ignores user behavior and business necessity, and it ultimately fails.

The Risk:

An outright ban doesn’t stop usage; it just drives it further into the shadows. Developers and other employees under pressure to deliver will find workarounds, using personal devices and unsanctioned tools to do their jobs. This reactive posture stifles innovation and ensures that the AI usage that inevitably happens is completely invisible and insecure.

The Proof:

- A 2024 Cisco study found that over one in four (27%) organizations have completely banned the use of Generative AI. The same study noted that employees aren’t waiting for permission, with 48% admitting to entering non-public company information into these tools.

- Entire nations have attempted this, with Italy temporarily banning ChatGPT in March 2023 over data privacy concerns related to GDPR. The ban was quickly lifted once the provider made concessions, demonstrating that engagement, not prohibition, is the long-term path forward.

Lesson 5: The Browser is the Logical Point of Control

The Reality:

The common denominator across web-based AI tools, embedded AI in SaaS, and browser extensions is the browser itself. It is the universal interface where data is entered, displayed, and transferred, making it the most effective place for monitoring and enforcement.

The Risk:

Traditional security tools like network firewalls or CASBs lack the necessary context to secure AI usage. They operate out-of-band and are often blind to the specific user activities within a web session, such as copy-pasting sensitive data or sharing a conversation. Without browser-level visibility, security teams cannot distinguish between safe and risky behavior.

The Proof:

- An effective AI security strategy requires granular, real-time controls. This includes identifying and auditing all AI usage, enforcing the use of corporate accounts, and providing real-time, in-context education to users as they interact with AI tools.

- For high-risk activities, the ability to actively block or redact the upload of sensitive data (via file upload or copy-paste) is critical. These actions can only be reliably intercepted and controlled from within the browser, making a browser-based security approach a technical necessity for enabling AI safely.
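
The block-or-redact decision can be sketched as a pure function that inspects text before it leaves the page. This is a minimal illustration, assuming simple regular-expression detectors; the `inspectOutgoing` function and the `DETECTORS` list (email addresses, U.S. SSN-formatted numbers, AWS-style access key IDs, and PEM private key headers) are hypothetical examples, and commercial browser security tools use far richer classifiers. In a browser, a function like this might be called from a paste or upload handler on GenAI sites; that wiring is omitted here.

```typescript
type OutgoingAction = "allow" | "redact" | "block";

interface Detector {
  label: string;
  pattern: RegExp; // global flag so every occurrence is replaced
  blockOutright: boolean; // true when redaction alone is not considered enough
}

// Illustrative detectors only; real DLP engines use far richer classifiers.
const DETECTORS: Detector[] = [
  { label: "PRIVATE_KEY", pattern: /-----BEGIN [A-Z ]*PRIVATE KEY-----/g, blockOutright: true },
  { label: "AWS_KEY_ID", pattern: /\bAKIA[0-9A-Z]{16}\b/g, blockOutright: false },
  { label: "SSN", pattern: /\b\d{3}-\d{2}-\d{4}\b/g, blockOutright: false },
  { label: "EMAIL", pattern: /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g, blockOutright: false },
];

interface Verdict {
  action: OutgoingAction;
  text: string; // what may be sent on ("" when blocked)
  findings: string[]; // labels of detectors that matched, in detector order
}

// Decide what happens to text a user is about to paste or upload.
function inspectOutgoing(text: string): Verdict {
  const findings: string[] = [];
  let redacted = text;
  let block = false;
  for (const d of DETECTORS) {
    let hits = 0;
    // replace() with a global regex rewrites every match and counts them.
    redacted = redacted.replace(d.pattern, () => {
      hits += 1;
      return `[REDACTED:${d.label}]`;
    });
    if (hits > 0) {
      findings.push(d.label);
      if (d.blockOutright) block = true;
    }
  }
  if (block) return { action: "block", text: "", findings };
  if (findings.length > 0) return { action: "redact", text: redacted, findings };
  return { action: "allow", text, findings };
}

const example = inspectOutgoing("Summarize the ticket from jane.doe@example.com, SSN 123-45-6789.");
console.log(example.action); // "redact"
console.log(example.text);
// Summarize the ticket from [REDACTED:EMAIL], SSN [REDACTED:SSN].
```

Blocking outright on private keys while redacting lower-risk identifiers mirrors the choice between blocking and redacting: redaction lets the user keep working with the AI tool while the sensitive values never leave the browser.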

At TCG, we’ve already taken steps by providing approved tools such as Gemini and librechat.tcg.com and by establishing clear IT Rules of Behavior, but it’s up to each of us to use them wisely. By staying informed, choosing secure channels, and avoiding risky shortcuts, we protect our customers and our company while still harnessing the benefits of AI.